Predict rating of review using BoardGameGeek Reviews dataset

The goal of this project is to use the corpus of reviews in this dataset to learn the relationship between each review and its corresponding rating.

Once the model is trained on the review data, we ask the user to enter a new review and predict its rating.

We begin by installing and importing all the basic libraries:

```python
!pip install -q pandas numpy nltk scikit-learn matplotlib seaborn wordcloud ipython
```

```python
import random
import gc
import re
import string
import pickle
import os
import warnings
warnings.simplefilter(action='ignore', category=FutureWarning)

import numpy as np
import pandas as pd
from IPython.display import display, HTML

from sklearn.metrics import confusion_matrix, classification_report, accuracy_score
from sklearn.model_selection import train_test_split, KFold
from sklearn.naive_bayes import MultinomialNB
from sklearn.linear_model import LogisticRegression, LinearRegression
from sklearn.feature_extraction.text import TfidfVectorizer

import seaborn as sns
from matplotlib import pyplot as plt

import nltk
from nltk.corpus import stopwords
nltk.download(['words', 'stopwords', 'wordnet'], quiet=True)
```

```
True
```

```python
from google.colab import drive
drive.mount('/content/drive')
```

```
Drive already mounted at /content/drive; to attempt to forcibly remount, call drive.mount("/content/drive", force_remount=True).
```

Here we define the paths to the dataset and the pickle files.

We pickle our dataset after every major step to save time.

Due to the sheer amount of data we have, pre-processing takes a while.

I will also invoke the garbage collector often to free memory, since the volume of input data means we need every bit of it.

```python
DATA_DIR = "/content/drive/My Drive/Colab Notebooks/data/"

DATASET = DATA_DIR + "bgg-13m-reviews.csv"

REVIEWS_PICKLE = DATA_DIR + "reviews.pkl"
REVIEWS_DATA_CHANGED = False

CLEAN_TEXT_PICKLE = DATA_DIR + "clean_text.pkl"
LEMMATIZED_PICKLE = DATA_DIR + "lemmatized.pkl"
STOPWORDS_PICKLE = DATA_DIR + "stopwords.pkl"
CLEANED_PICKLE = DATA_DIR + "cleaned_reviews.pkl"

MODEL_PICKLE = DATA_DIR + "model.pkl"
VOCABULARY_PICKLE = DATA_DIR + "vocab.pkl"
```
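The "load from the pickle if it exists, otherwise compute and pickle" pattern used in the cells below can be sketched as a small helper. This is a minimal sketch, not code from this notebook: `load_or_compute` is a hypothetical name, and it writes a gzip-compressed pickle with the standard library instead of going through pandas' `to_pickle`/`read_pickle`. The `force` flag plays the role `REVIEWS_DATA_CHANGED` is meant to play: when set, the cache is ignored and rebuilt.

```python
import gzip
import os
import pickle
import tempfile


def load_or_compute(path, compute, force=False):
    """Return the object cached at `path`, or build it with `compute()` and cache it."""
    if os.path.isfile(path) and not force:
        with gzip.open(path, "rb") as f:
            return pickle.load(f)
    result = compute()
    with gzip.open(path, "wb") as f:
        pickle.dump(result, f)
    return result


# Usage: the expensive step runs only on the first call
calls = []


def expensive_step():
    calls.append(1)
    return {"reviews": 3}


cache_file = os.path.join(tempfile.mkdtemp(), "step.pkl")
first = load_or_compute(cache_file, expensive_step)
second = load_or_compute(cache_file, expensive_step)
print(first == second, len(calls))  # True 1
```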

Loading the data

Now that we've got the formalities out of the way, let's start exploring our dataset and see how we can solve this problem.

First, we need to load the dataset like so:

```python
reviews = pd.DataFrame()

# Load the cached pickle unless the raw data has changed
if os.path.isfile(REVIEWS_PICKLE) and not REVIEWS_DATA_CHANGED:
    print('Pickled reviews found. Loading from pickle file.')
    reviews = pd.read_pickle(REVIEWS_PICKLE, compression="gzip")
else:
    print("Reading reviews csv")
    reviews = pd.read_csv(DATASET, usecols=['comment', 'rating'])
    print("Pickling dataset")
    reviews.to_pickle(REVIEWS_PICKLE, compression="gzip")

reviews = reviews.reindex(columns=['comment', 'rating'])

# Shuffle the rows with a fixed seed for reproducibility
reviews = reviews.sample(frac=1, random_state=3).reset_index(drop=True)

gc.collect()
display(reviews)
```

```
Pickled reviews found. Loading from pickle file.
```

|  | comment | rating |
|---|---|---|
| 0 | NaN | 6.5 |
| 1 | NaN | 8.0 |
| 2 | NaN | 8.0 |
| 3 | NaN | 7.0 |
| 4 | http://boardgamers.ro/saboteur-recenzie/ | 6.0 |
| ... | ... | ... |
| 13170068 | "Solid deck-builder in which you need to strat... | 6.5 |
| 13170069 | I adore this game. Not only is it an incredibl... | 10.0 |
| 13170070 | NaN | 9.0 |
| 13170071 | NaN | 6.0 |
| 13170072 | Still the easiest game to get to the table. Es... | 6.0 |

13170073 rows × 2 columns

Dropping rows with empty reviews

Great! We've got our dataset loaded.

Subsequent runs should also be faster because we've pickled the data.

As we can see, several rows have a rating but no review.

We will remove these rows, as we cannot use them.

```python
empty_reviews = reviews.comment.isna().sum()
print("Dropping {} rows from dataset".format(empty_reviews))

reviews.dropna(subset=['comment'], inplace=True)
reviews = reviews.reset_index(drop=True)

print("We now have {} reviews remaining".format(reviews.shape[0]))

gc.collect()
display(reviews)
```

```
Dropping 10531901 rows from dataset
We now have 2638172 reviews remaining
```

|  | comment | rating |
|---|---|---|
| 0 | http://boardgamers.ro/saboteur-recenzie/ | 6.0 |
| 1 | A nice family game but a little light for my o... | 5.0 |
| 2 | "This was the first modern board game that my ... | 8.0 |
| 3 | Great two player game. In fact, how did Knizia... | 9.0 |
| 4 | Risk but with more pieces. | 5.0 |
| ... | ... | ... |
| 2638167 | Great light game. Theme and simplicity make it... | 7.0 |
| 2638168 | Detailed board All sleeved | 9.0 |
| 2638169 | "Solid deck-builder in which you need to strat... | 6.5 |
| 2638170 | I adore this game. Not only is it an incredibl... | 10.0 |
| 2638171 | Still the easiest game to get to the table. Es... | 6.0 |

2638172 rows × 2 columns

Cleaning the reviews

Wow! That was a huge difference. We've shed about 10.5 million rows!

Now we need to clean up these reviews, as they contain a lot of noise that says little about the rating.

For this dataset, we will be removing the following patterns from the text:

- Words shorter than 3 or longer than 15 characters

- Special characters (punctuation) and text inside square brackets

- URLs

- HTML tags

- All kinds of redundant whitespaces

- Words that have numbers between them

- Words that begin or end with a number

Once all this is done, we tokenize the reviews and store the resulting token lists in the dataframe.

```python
# Tokenizer that keeps runs of word characters
tokenizer = nltk.tokenize.RegexpTokenizer(r'\w+')


def clean_text(text):
    text = text.lower().strip()
    # Drop words shorter than 3 or longer than 15 characters
    text = " ".join([w for w in text.split() if 3 <= len(w) <= 15])
    text = re.sub(r'\[.*?\]', '', text)                 # text in square brackets
    text = re.sub(r'https?://\S+|www\.\S+', '', text)   # URLs
    text = re.sub(r'<.*?>+', '', text)                  # HTML tags
    text = re.sub('[%s]' % re.escape(string.punctuation), '', text)  # punctuation
    text = re.sub(r'\n', '', text)                      # newlines
    text = re.sub(r'\w+\d+\w*', '', text)               # words with a digit after the first character
    text = re.sub(r'\d+\w+\d*', '', text)               # words starting with a digit
    text = re.sub(r'\W+', ' ', text)                    # collapse leftover non-word runs to one space
    return tokenizer.tokenize(text)


if os.path.isfile(CLEAN_TEXT_PICKLE) and not REVIEWS_DATA_CHANGED:
    print("Pickled clean text found. Loading from pickle file.")
    reviews = pd.read_pickle(CLEAN_TEXT_PICKLE, compression="gzip")
else:
    print("Cleaning reviews")
    reviews['comment'] = reviews['comment'].apply(clean_text)
    print("Pickling cleaned dataset")
    reviews.to_pickle(CLEAN_TEXT_PICKLE, compression="gzip")

gc.collect()
display(reviews)
```

```
Pickled clean text found. Loading from pickle file.
```

|  | comment | rating |
|---|---|---|
| 0 | [currently, this, sits, list, favorite, game] | 10.0 |
| 1 | [know, says, how, many, plays, but, many, many... | 10.0 |
| 2 | [will, never, tire, this, game, awesome] | 10.0 |
| 3 | [this, probably, the, best, game, ever, played... | 10.0 |
| 4 | [fantastic, game, got, hooked, games, all, ove... | 10.0 |
| ... | ... | ... |
| 2638167 | [horrible, party, game, dumping, this, one] | 3.0 |
| 2638168 | [difficult, build, anything, all, with, the, i... | 3.0 |
| 2638169 | [lego, created, version, pictionary, only, you... | 3.0 |
| 2638170 | [this, game, very, similar, creationary, comes... | 2.5 |
| 2638171 | [this, game, was, really, bad, worst, that, pl... | 2.0 |

2638172 rows × 2 columns
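To see what the cleaning rules do to a single review, here is a self-contained version of the cleaning function that runs without NLTK. It is a sketch under two assumptions: `re.findall(r"\w+", ...)` stands in for `RegexpTokenizer(r'\w+')` (both return runs of word characters), and leftover non-word characters are replaced with a space so words stay separate. The name `clean_review` and the sample review are made up for illustration; the newline rule is omitted because splitting and re-joining on whitespace already removes newlines.

```python
import re
import string

PUNCTUATION = "[%s]" % re.escape(string.punctuation)


def clean_review(text):
    """Lowercase, strip noise and return the remaining word tokens."""
    text = text.lower().strip()
    # The length filter runs on the raw, whitespace-separated words
    text = " ".join(w for w in text.split() if 3 <= len(w) <= 15)
    text = re.sub(r"\[.*?\]", "", text)                # text in square brackets
    text = re.sub(r"https?://\S+|www\.\S+", "", text)  # URLs
    text = re.sub(r"<.*?>+", "", text)                 # HTML tags
    text = re.sub(PUNCTUATION, "", text)               # punctuation
    text = re.sub(r"\w+\d+\w*", "", text)              # words with a digit after the first character
    text = re.sub(r"\d+\w+\d*", "", text)              # words starting with a digit
    text = re.sub(r"\W+", " ", text)                   # collapse leftovers to single spaces
    return re.findall(r"\w+", text)


print(clean_review("Loved it!! 10/10, see http://bgg.cc and <b>gr8</b> [edit]"))
# ['loved', 'it', 'see', 'and']
```

Note that "it!!" passes the length filter before its punctuation is stripped, so a two-letter token survives here; in the notebook such short tokens are removed later by `remove_stopwords`, which keeps only words longer than two characters.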

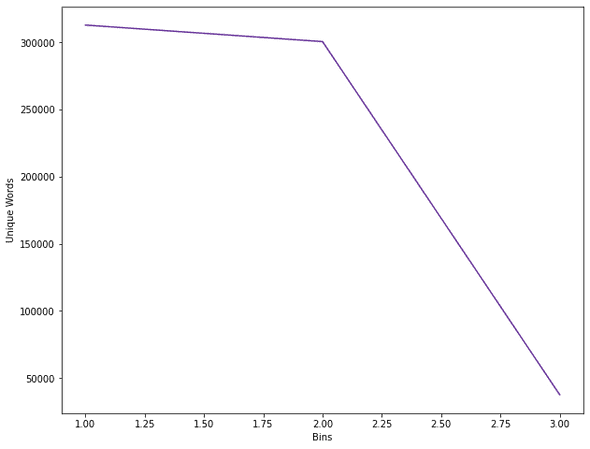

Calculating unique words

We've gotten rid of most of the oddities in our text data.

But with a corpus this large, the same word appears in many inflected forms (for example "game" and "games"), so it's better to lemmatize them. Before we do that, let's see how many unique words we currently have.

```python
uniq_words = []

unique_words = reviews.explode('comment').comment.nunique()
uniq_words.append(unique_words)

print("Unique words before lemmatizing {}".format(unique_words))
```

```
Unique words before lemmatizing 313013
```
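The `explode(...).nunique()` one-liner flattens every token list into a single column and counts the distinct tokens. A plain-Python equivalent on made-up token lists is below. One detail worth knowing: `explode` turns an empty list into `NaN`, and `nunique` skips `NaN` by default, so empty reviews do not add to the count, just as the empty list adds nothing to the set here.

```python
from itertools import chain

# Made-up token lists standing in for the `comment` column
token_lists = [["great", "game"], ["game", "night"], [], ["great", "fun"]]

# Flatten all reviews into one stream of tokens and keep the distinct ones
vocabulary = set(chain.from_iterable(token_lists))
print(len(vocabulary))  # 4
```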

Lemmatizing

Wow, that's a lot of words. Let's see what happens after lemmatizing our reviews.

```python
lemmatizer = nltk.stem.WordNetLemmatizer()


def lemmatize_data(text):
    # WordNetLemmatizer treats every token as a noun by default
    return [lemmatizer.lemmatize(w) for w in text]


if os.path.isfile(LEMMATIZED_PICKLE) and not REVIEWS_DATA_CHANGED:
    print("Pickled lemmatized reviews found. Loading from pickle file.")
    reviews = pd.read_pickle(LEMMATIZED_PICKLE, compression="gzip")
else:
    print("Lemmatizing reviews")
    reviews['comment'] = reviews['comment'].apply(lemmatize_data)
    print("Pickling lemmatized reviews")
    reviews.to_pickle(LEMMATIZED_PICKLE, compression="gzip")

display(reviews)
gc.collect()

unique_words = reviews.explode('comment').comment.nunique()
uniq_words.append(unique_words)
print("Unique words after lemmatizing {}".format(unique_words))
```

```
Pickled lemmatized reviews found. Loading from pickle file.
```

|  | comment | rating |
|---|---|---|
| 0 | [currently, this, sits, list, favorite, game] | 10.0 |
| 1 | [know, say, how, many, play, but, many, many, ... | 10.0 |
| 2 | [will, never, tire, this, game, awesome] | 10.0 |
| 3 | [this, probably, the, best, game, ever, played... | 10.0 |
| 4 | [fantastic, game, got, hooked, game, all, over... | 10.0 |
| ... | ... | ... |
| 2638167 | [horrible, party, game, dumping, this, one] | 3.0 |
| 2638168 | [difficult, build, anything, all, with, the, i... | 3.0 |
| 2638169 | [lego, created, version, pictionary, only, you... | 3.0 |
| 2638170 | [this, game, very, similar, creationary, come,... | 2.5 |
| 2638171 | [this, game, wa, really, bad, worst, that, pla... | 2.0 |

2638172 rows × 2 columns

```
Unique words after lemmatizing 300678
```

Removing stopwords and non-English words

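Now let's remove the stopwords as well. They are mostly redundant for our training, since they mainly provide grammatical context rather than sentiment. We also drop words that are not in NLTK's English word list.

Besides NLTK's stopword list and a larger general-purpose English list, I've identified some custom, domain-specific words (such as "game", "player" and "card") and added them to the list as well.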

```python
def remove_stopwords(text):
    # Keep English, non-stopword tokens. Because `and` binds tighter than `or`,
    # tokens that are not purely alphabetic are kept as well.
    words = [
        w for w in text if w not in stop_words and w in words_corpus or not w.isalpha()]
    # set() drops duplicate tokens within a review; then drop words of 2 characters or fewer
    words = list(filter(lambda word: len(word) > 2, set(words)))
    return words


if os.path.isfile(STOPWORDS_PICKLE) and not REVIEWS_DATA_CHANGED:
    print("Reading stopwords pickle")
    reviews = pd.read_pickle(STOPWORDS_PICKLE, compression="gzip")
else:
    # Dictionary of English words, used to drop non-English tokens
    words_corpus = set(nltk.corpus.words.words())

    stop_words_json = {"en": ["a", "a's", "able", "about", "above", "according", "accordingly", "across", "actually", "after", "afterwards", "again", "against", "ain't", "aint", "all", "allow", "allows", "almost", "alone", "along", "already", "also", "although", "always", "am", "among", "amongst", "an", "and", "another", "any", "anybody", "anyhow", "anyone", "anything", "anyway", "anyways", "anywhere", "apart", "appear", "appreciate", "appropriate", "are", "aren't", "arent", "around", "as", "aside", "ask", "asking", "associated", "at", "available", "away", "awfully", "b", "be", "became", "because", "become", "becomes", "becoming", "been", "before", "beforehand", "behind", "being", "believe", "below", "beside", "besides", "best", "better", "between", "beyond", "both", "brief", "but", "by", "c", "c'mon", "cmon", "cs", "c's", "came", "can", "can't", "cannot", "cant", "cause", "causes", "certain", "certainly", "changes", "clearly", "co", "com", "come", "comes", "concerning", "consequently", "consider", "considering", "contain", "containing", "contains", "corresponding", "could", "couldn't", "course", "currently", "d", "definitely", "described", "despite", "did", "didn't", "different", "do", "does", "doesn't", "doesn", "doing", "don't", "done", "down", "downwards", "during", "e", "each", "edu", "eg", "eight", "either", "else", "elsewhere", "enough", "entirely", "especially", "et", "etc", "even", "ever", "every", "everybody", "everyone", "everything", "everywhere", "ex", "exactly", "example", "except", "f", "far", "few", "fifth", "first", "five", "followed",
        "following", "follows", "for", "former", "formerly", "forth", "four", "from", "further", "furthermore", "g", "get", "gets", "getting", "given", "gives", "go", "goes", "going", "gone", "got", "gotten", "greetings", "h", "had", "hadn't", "hadnt", "happens", "hardly", "has", "hasn't", "hasnt", "have", "haven't", "havent", "having", "he", "he's", "hes", "hello", "help", "hence", "her", "here", "here's", "heres", "hereafter", "hereby", "herein", "hereupon", "hers", "herself", "hi", "him", "himself", "his", "hither", "hopefully", "how", "howbeit", "however", "i", "i'd", "id", "i'll", "i'm", "im", "i've", "ive", "ie", "if", "ignored", "immediate", "in", "inasmuch", "inc", "indeed", "indicate", "indicated", "indicates", "inner", "insofar", "instead", "into", "inward", "is", "isn't", "isnt", "it", "it'd", "itd", "it'll", "itll", "it's", "its", "itself", "j", "just", "k", "keep", "keeps", "kept", "know", "known", "knows", "l", "last", "lately", "later", "latter", "latterly", "least", "less", "lest", "let", "let's", "lets", "like", "liked", "likely", "little", "look", "looking", "looks", "ltd", "m", "mainly", "many", "may", "maybe", "me", "mean", "meanwhile", "merely", "might", "more", "moreover", "most", "mostly", "much", "must", "my", "myself", "n", "name", "namely", "nd", "near", "nearly", "necessary", "need", "needs", "neither", "never", "nevertheless", "new", "next", "nine", "no", "nobody", "non", "none", "noone", "nor", "normally", "not", "nothing", "novel", "now", "nowhere", "o", "obviously", "of", "off", "often", "oh", "ok", "okay", "old", "on", "once", "one", "ones", "only", "onto", "or", "other", "others", "otherwise", "ought", "our", "ours", "ourselves", "out", "outside", "over", "overall", "own", "p", "particular", "particularly", "per", "perhaps", "placed", "please", "plus", "possible", "presumably", "probably", "provides", "q", "que", "quite", "qv", "r", "rather", "rd", "re", "really", "reasonably", "regarding", "regardless", "regards", "relatively",
        "respectively", "right", "s", "said", "same", "saw", "say", "saying", "says", "second", "secondly", "see", "seeing", "seem", "seemed", "seeming", "seems", "seen", "self", "selves", "sensible", "sent", "serious", "seriously", "seven", "several", "shall", "she", "should", "shouldn't", "shouldnt", "since", "six", "so", "some", "somebody", "somehow", "someone", "something", "sometime", "sometimes", "somewhat", "somewhere", "soon", "sorry", "specified", "specify", "specifying", "still", "sub", "such", "sup", "sure", "t", "t's", "ts", "take", "taken", "tell", "tends", "th", "than", "thank", "thanks", "thanx", "that", "that's", "thats", "the", "their", "theirs", "them", "themselves", "then", "thence", "there", "there's", "theres", "thereafter", "thereby", "therefore", "therein", "theres", "thereupon", "these", "they", "they'd", "theyd", "they'll", "theyll", "they're", "theyre", "theyve", "they've", "think", "third", "this", "thorough", "thoroughly", "those", "though", "three", "through", "throughout", "thru", "thus", "to", "together", "too", "took", "toward", "towards", "tried", "tries", "truly", "try", "trying", "twice", "two", "u", "un", "under", "unfortunately", "unless", "unlikely", "until", "unto", "up", "upon", "us", "use", "used", "useful", "uses", "using", "usually", "uucp", "v", "value", "various", "very", "via", "viz", "vs", "w", "wa", "want", "wants", "was", "wasn't", "wasnt", "way", "we", "we'd", "we'll", "we're", "we've", "weve", "welcome", "well", "went", "were", "weren't", "werent", "what", "what's", "whats", "whatever", "when", "whence", "whenever", "where", "wheres", "where's", "whereafter", "whereas", "whereby", "wherein", "whereupon", "wherever", "whether", "which", "while", "whither", "who", "who's", "whos", "whoever", "whole", "whom", "whose", "why", "will", "willing", "wish", "with", "within", "without", "won't", "wont", "wonder", "would", "wouldn't", "wouldny", "x", "y", "yes", "yet", "you", "you'd", "youd", "you'll", "youll", "you're", "youre",
        "you've", "youve", "your", "yours", "yours", "yourself", "yourselves", "z", "zero"]}

    stop_words_json_en = set(stop_words_json['en'])

    stop_words_nltk_en = set(stopwords.words('english'))

    # Domain-specific words that appear in most reviews
    custom_stop_words = ["rating", "ish", "havn", "dice", "end", "set", "doesnt", "give", "find", "doe", "system", "tile", "table", "deck", "box", "made", "part", "based", "worker", "wife", "put", "havent", "game", "play", "player", "one", "two", "card", "ha", "wa", "dont", "board", "time", "make", "rule", "thing", "version", "mechanic", "year", "theme", "rating", "family", "child", "money", "edition", "collection", "piece", "wasnt", "didnt"]

    stop_words = stop_words_nltk_en.union(
        stop_words_json_en, custom_stop_words)

    print("Removing {} stopwords from text".format(len(stop_words)))
    print()

    reviews['comment'] = reviews['comment'].apply(remove_stopwords)

    print("Pickling stopwords data")
    reviews.to_pickle(STOPWORDS_PICKLE, compression="gzip")

gc.collect()

print("After removing stopwords:")
display(reviews)
```

```
Reading stopwords pickle
After removing stopwords:
```

|  | comment | rating |
|---|---|---|
| 0 | [list, favorite] | 10.0 |
| 1 | [uncounted] | 10.0 |
| 2 | [tire, awesome] | 10.0 |
| 3 | [negotiation, skill, thinking] | 10.0 |
| 4 | [fantastic, hooked] | 10.0 |
| ... | ... | ... |
| 2638167 | [party, horrible, dumping] | 3.0 |
| 2638168 | [difficult, included, build] | 3.0 |
| 2638169 | [create, abstract, fun, limited, number, pictu... | 3.0 |
| 2638170 | [similar, creationary] | 2.5 |
| 2638171 | [bad, worst, genre] | 2.0 |

2638172 rows × 2 columns
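The filter in `remove_stopwords` relies on Python's operator precedence: `and` binds tighter than `or`, so the condition reads `(w not in stop_words and w in words_corpus) or not w.isalpha()`. The sketch below re-creates that logic with tiny made-up word lists (the function name `filter_tokens` is hypothetical) to show which tokens survive. Whether keeping non-alphabetic tokens was intended is not stated in the notebook.

```python
def filter_tokens(tokens, stop_words, english_words):
    """Re-creation of the remove_stopwords filter with explicit word lists."""
    # Parsed as (not a stopword AND an English word) OR not purely alphabetic
    kept = [w for w in tokens
            if w not in stop_words and w in english_words or not w.isalpha()]
    # set() removes repeated tokens, so counts within a review are lost
    return [w for w in set(kept) if len(w) > 2]


demo_stop_words = {"the", "game"}
demo_english = {"the", "game", "fun"}
demo_tokens = ["the", "game", "fun", "fun", "xyzzy", "abc_1"]
print(sorted(filter_tokens(demo_tokens, demo_stop_words, demo_english)))  # ['abc_1', 'fun']
```

Because duplicates are dropped, each word appears at most once per cleaned review, so the raw term frequency that TF-IDF sees later is always 0 or 1.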

Let's see what the word count is now

```python
unique_words = reviews.explode('comment').comment.nunique()
uniq_words.append(unique_words)
print(unique_words)
```

```
37633
```

Wow! That's a marked difference from the 313,013 unique words we started with. A smaller vocabulary should reduce training time and computation.

It should also help accuracy, since the model can focus on the keywords that carry sentiment.

Let's plot how the number of unique words changed across the processing steps.

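```python
# Unique-word counts recorded after each step (the values collected in uniq_words)
steps = ["Cleaned", "Lemmatized", "Stopwords removed"]
counts = [313013, 300678, 37633]

fig, ax = plt.subplots(figsize=(10, 8))
ax.plot(steps, counts, color="#663399", marker="o")
ax.set_ylabel('Unique Words')
ax.set_xlabel('Processing step')
plt.show()
```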

Finishing up and saving progress

Some rows will have become empty during all this cleaning.

Let's remove them and then pickle our dataset so that we don't have to do all this cleaning again.

```python
def drop_empty_reviews(df):
    # An empty token list is falsy, so astype(bool) flags the rows to drop
    df = df.drop(df[~df.comment.astype(bool)].index)
    return df


if os.path.isfile(CLEANED_PICKLE) and not REVIEWS_DATA_CHANGED:
    print("Reading cleaned reviews pickle")
    reviews = pd.read_pickle(CLEANED_PICKLE, compression="gzip")
else:
    reviews = drop_empty_reviews(reviews)
    print("Pickling cleaned reviews data")
    reviews.to_pickle(CLEANED_PICKLE, compression="gzip")

REVIEWS_DATA_CHANGED = False

gc.collect()

display(reviews)
```

```
Reading cleaned reviews pickle
```

|  | comment | rating |
|---|---|---|
| 0 | [list, favorite] | 10.0 |
| 1 | [uncounted] | 10.0 |
| 2 | [tire, awesome] | 10.0 |
| 3 | [negotiation, skill, thinking] | 10.0 |
| 4 | [fantastic, hooked] | 10.0 |
| ... | ... | ... |
| 2638167 | [party, horrible, dumping] | 3.0 |
| 2638168 | [difficult, included, build] | 3.0 |
| 2638169 | [create, abstract, fun, limited, number, pictu... | 3.0 |
| 2638170 | [similar, creationary] | 2.5 |
| 2638171 | [bad, worst, genre] | 2.0 |

2405729 rows × 2 columns

Exploring our dataset

Now that we've completed our cleaning, we need to assess what kind of model we should use to predict our ratings.

But first, let's see how many distinct values we have in the ratings column.

```python
reviews.rating.nunique()
```

```
3213
```

We've got 3,213 unique values. With this many distinct values, the rating behaves much like a continuous target, which suits a regression model.

But since we will be predicting whole-number ratings, I'm going to round the column. This lets us try both regression and classification and keep whichever gives us a better result.

Note that rounding generally isn't recommended, as it discards information and will affect the outcome.

I'm only doing this due to computational constraints. Regression models may well give better results on the unrounded values.

```python
# Note: .round() rounds exact halves to the nearest even number (2.5 -> 2, 7.5 -> 8)
reviews.rating = reviews.rating.round().astype(int)

reviews
```

|  | comment | rating |
|---|---|---|
| 0 | [list, favorite] | 10 |
| 1 | [uncounted] | 10 |
| 2 | [tire, awesome] | 10 |
| 3 | [negotiation, skill, thinking] | 10 |
| 4 | [fantastic, hooked] | 10 |
| ... | ... | ... |
| 2638167 | [party, horrible, dumping] | 3 |
| 2638168 | [difficult, included, build] | 3 |
| 2638169 | [create, abstract, fun, limited, number, pictu... | 3 |
| 2638170 | [similar, creationary] | 2 |
| 2638171 | [bad, worst, genre] | 2 |

2405729 rows × 2 columns
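The rounding step deserves a quick check: pandas' `Series.round` follows NumPy, which rounds exact halves to the nearest even integer, just like Python's built-in `round`. That is why the 2.5 rating in the table above became 2 rather than 3. The snippet below compares that behavior with round-half-up on a few made-up ratings.

```python
import math

ratings = [2.5, 6.5, 7.5, 8.5, 9.6]

# Built-in round(): exact halves go to the nearest even integer ("banker's rounding")
half_even = [round(r) for r in ratings]
# Round-half-up alternative: add 0.5 and take the floor
half_up = [math.floor(r + 0.5) for r in ratings]

print(half_even)  # [2, 6, 8, 8, 10]
print(half_up)    # [3, 7, 8, 9, 10]
```

Half-point ratings such as 6.5 appear in the samples above, so this choice decides which class every x.5 rating lands in.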

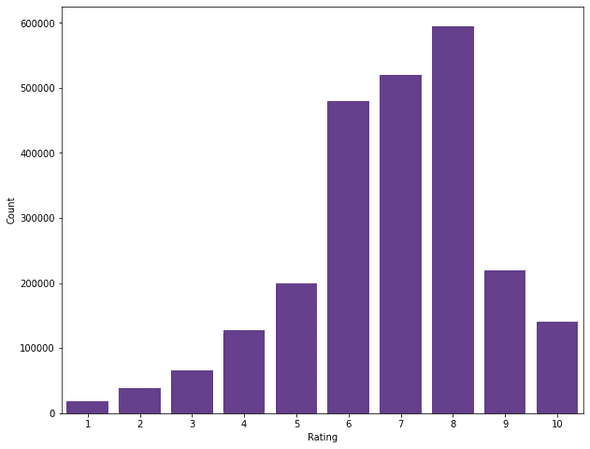

We should have 11 distinct values (0 to 10) after this. Let's see how many ratings fall into each category.

```python
reviews.rating.value_counts()
```

```
8     595145
7     520442
6     480153
9     219429
5     200143
10    140037
4     127085
3      66466
2      38184
1      18634
0         11
Name: rating, dtype: int64
```

We can see that only 11 reviews have a rating of zero. Their contribution is negligible, so we're going to remove them (along with any other rating that has fewer than 50 reviews).

```python
# Drop every rating class with fewer than 50 reviews
class_distrib = reviews.rating.value_counts(ascending=True)
classes_to_drop = class_distrib[class_distrib < 50].index.values

for rating in classes_to_drop:
    rows_to_drop = reviews[reviews.rating == rating].index.to_list()
    reviews.drop(rows_to_drop, inplace=True)
    print("We have {} reviews remaining".format(reviews.shape))
```

```
We have (2405718, 2) reviews remaining
```

Let's visualize the distribution of ratings a bit better with a bar plot.

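```python
rating_counts = reviews.rating.value_counts().sort_index()

fig, ax = plt.subplots(figsize=(10, 8))
# Recent seaborn versions expect x and y as keyword arguments
sns.barplot(x=rating_counts.index, y=rating_counts.values, color="#663399", ax=ax)
ax.set_xlabel("Rating")
ax.set_ylabel("Count")
plt.show()
```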

Splitting dataset for training, validation and testing

The distribution looks roughly bell-shaped but skewed toward high ratings: most of the reviews lie between 6 and 9. Since the dataset is imbalanced, this is likely to bias the model toward those ratings.
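Because the classes are imbalanced, it helps to know what a trivial model would score. The sketch below computes the majority-class baseline (always predict the most common rating) from the counts printed by `value_counts()` above, with the 11 zero ratings removed.

```python
# Rating counts from value_counts() above (rating 0 already dropped)
counts = {8: 595145, 7: 520442, 6: 480153, 9: 219429, 5: 200143,
          10: 140037, 4: 127085, 3: 66466, 2: 38184, 1: 18634}

total = sum(counts.values())
majority = max(counts, key=counts.get)
baseline = counts[majority] / total

print(total, majority, round(baseline * 100, 2))  # 2405718 8 24.74
```

On the development split, always predicting 8 would score 29,635 / 120,286, or about 24.6% (the support of class 8 in the classification reports), which is a useful reference point for the accuracies of around 30% the models reach.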

Now that we have a rough idea of our course of action, let's split the dataset for training and testing. Due to the volume of data and our computational constraints, we will use only 10% of it for training and another 10% as a hold-out set, split evenly into development and test sets.

```python
# 10% of the data for training and another 10% held out
X_train, X_test, y_train, y_test = train_test_split(
    reviews.comment, reviews.rating, train_size=0.1, test_size=0.1)
# Split the held-out data in half: development and test
X_dev, X_test, y_dev, y_test = train_test_split(X_test, y_test, train_size=0.5)

# TfidfVectorizer expects strings, so join each token list back into text
X_train = X_train.apply(' '.join)
X_dev = X_dev.apply(' '.join)
X_test = X_test.apply(' '.join)

gc.collect()

print("Number of records chosen for training: {}".format(X_train.shape[0]))
print("Number of records chosen for development: {}".format(X_dev.shape[0]))
print("Number of records chosen for testing: {}".format(X_test.shape[0]))
```

```
Number of records chosen for training: 240571
Number of records chosen for development: 120286
Number of records chosen for testing: 120286
```
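The record counts above follow from how `train_test_split` turns fractional sizes into row counts. As far as I know, scikit-learn floors the training fraction and rounds the test fraction up, and when only `train_size` is given the test set is the remainder. The arithmetic below reproduces the printed numbers under that assumption.

```python
import math

n = 2405718  # reviews left after dropping the zero ratings

# First split: 10% for training, 10% held out
n_train = math.floor(0.1 * n)
n_holdout = math.ceil(0.1 * n)

# Second split: train_size=0.5 of the hold-out set for development, the rest for testing
n_dev = math.floor(0.5 * n_holdout)
n_test = n_holdout - n_dev

print(n_train, n_dev, n_test)  # 240571 120286 120286
```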

Great! We've got that sorted. Since we have 10 unique values in ratings and we're dealing with text, let's start off with a Multinomial Naive Bayes Classifier.

But, before we do that, we need to calculate the TF-IDF values for our reviews.

TF is the term frequency of each word in a review, i.e. the number of times the word appears in that review. This is fairly straightforward to calculate using a counter per review.

IDF (Inverse Document Frequency) is slightly trickier. It measures how common or rare a word is across all reviews, which tells us which words should be prioritized over others. A word that appears in nearly every review has an IDF close to 0 and contributes little to the model, while rarer words have a higher IDF and contribute more.

IDF is calculated by dividing the total number of reviews by the number of reviews that contain the word, and taking the logarithm to dampen the large values that can arise. This is what the final formula looks like:

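$\text{tfidf}_{i,d} = \text{tf}_{i,d} \cdot \text{idf}_{i}, \quad \text{idf}_{i} = \log\frac{N}{\text{df}_{i}}$

where $N$ is the total number of reviews and $\text{df}_{i}$ is the number of reviews that contain word $i$. By default, scikit-learn's TfidfVectorizer uses a smoothed variant, $\text{idf}_{i} = \ln\frac{1 + N}{1 + \text{df}_{i}} + 1$, and L2-normalizes each review's vector.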
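The TF-IDF computation can be sketched in plain Python. This sketch assumes scikit-learn's documented `TfidfVectorizer` defaults: lowercasing, the token pattern `(?u)\b\w\w+\b`, raw term counts, the smoothed IDF $\ln\frac{1+N}{1+\text{df}_i} + 1$ and L2 normalization of each review's vector. The three reviews are made up, and `tfidf` is a hypothetical helper, not part of any library.

```python
import math
import re
from collections import Counter

TOKEN = re.compile(r"(?u)\b\w\w+\b")  # scikit-learn's default token_pattern


def tfidf(docs):
    """Return ({term: idf}, [{term: weight} per doc]) with smoothed IDF and L2 norm."""
    tokenized = [TOKEN.findall(doc.lower()) for doc in docs]
    n = len(docs)
    # Document frequency: in how many reviews does each term appear?
    df = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}
    vectors = []
    for tokens in tokenized:
        weights = {term: tf * idf[term] for term, tf in Counter(tokens).items()}
        norm = math.sqrt(sum(w * w for w in weights.values()))
        vectors.append({t: w / norm for t, w in weights.items()} if norm else weights)
    return idf, vectors


idf, vectors = tfidf(["Fun fun game", "party game", "heavy game"])
print(round(idf["game"], 4), round(idf["fun"], 4))  # 1.0 1.6931
```

A word that appears in every review ("game") gets the minimum weight of 1 under the smoothed formula rather than 0, while rarer words are weighted higher.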

```python
vectorizer = TfidfVectorizer()

# Learn the vocabulary and IDF weights on the training set only
X_train_vec = vectorizer.fit_transform(X_train)

X_dev_vec = vectorizer.transform(X_dev)
X_test_vec = vectorizer.transform(X_test)
```

Selecting a model

We will be testing the following models:

- Multinomial Naive Bayes (works well with text data)

- Linear Regression (the target is numeric, so it may work)

- Logistic Regression (a standard classifier for categorical targets)

First let's give Naive Bayes a shot mainly due to the flexibility we get with text data. Naive Bayes methods are a set of supervised learning algorithms based on applying Bayes’ theorem with the “naive” assumption of conditional independence between every pair of features given the value of the class variable.

This is what the final classification rule looks like:

$\hat{y} = \arg\max_y P(y) \prod_{i=1}^{n} P(x_i \mid y)$
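The classification rule above can be made concrete with a tiny multinomial Naive Bayes written from scratch. This is a teaching sketch, not scikit-learn's implementation: it works in log space to avoid underflow, uses Laplace smoothing (`alpha=1`, the same default as `MultinomialNB`), counts whole tokens rather than the fractional TF-IDF weights the notebook feeds to `MultinomialNB`, and is trained on four made-up reviews. `train_mnb` and `predict_mnb` are hypothetical names.

```python
import math
from collections import Counter, defaultdict


def train_mnb(docs, labels, alpha=1.0):
    """Fit log P(y) and smoothed log P(word | y) from tokenized documents."""
    vocab = {w for d in docs for w in d}
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    for d, y in zip(docs, labels):
        word_counts[y].update(d)
    log_prior = {y: math.log(c / len(labels)) for y, c in class_counts.items()}
    log_lik = {}
    for y in class_counts:
        total = sum(word_counts[y].values()) + alpha * len(vocab)
        log_lik[y] = {w: math.log((word_counts[y][w] + alpha) / total) for w in vocab}
    return log_prior, log_lik


def predict_mnb(model, doc):
    """argmax_y [log P(y) + sum_i log P(x_i | y)], ignoring out-of-vocabulary words."""
    log_prior, log_lik = model
    scores = {y: log_prior[y] + sum(log_lik[y][w] for w in doc if w in log_lik[y])
              for y in log_prior}
    return max(scores, key=scores.get)


model = train_mnb([["awesome", "fun"], ["fantastic", "fun"], ["boring", "bad"], ["worst", "boring"]],
                  [10, 10, 2, 2])
print(predict_mnb(model, ["fun", "awesome"]), predict_mnb(model, ["boring"]))  # 10 2
```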

```python
accuracy_log = []

mnbc = MultinomialNB()

mnbc.fit(X_train_vec, y_train)

mnbc_predicted = mnbc.predict(X_dev_vec)

acc_score = accuracy_score(y_dev, mnbc_predicted)
mnbc_accuracy = round(acc_score * 100, 2)
mnbc_report = classification_report(
    y_dev, mnbc_predicted, digits=4, zero_division=0)

gc.collect()

print("Accuracy for Multinomial Naive Bayes = {}%".format(mnbc_accuracy))
print()
accuracy_log.append(acc_score)
print("Classification Report for Multinomial Naive Bayes")
print(mnbc_report)
```

```
Accuracy for Multinomial Naive Bayes = 29.4%

Classification Report for Multinomial Naive Bayes
              precision    recall  f1-score   support

           1     0.0000    0.0000    0.0000       926
           2     0.0000    0.0000    0.0000      1845
           3     0.0000    0.0000    0.0000      3357
           4     0.3286    0.0036    0.0071      6451
           5     0.1967    0.0047    0.0093     10112
           6     0.2816    0.3333    0.3053     24033
           7     0.2678    0.2212    0.2423     25999
           8     0.3073    0.7239    0.4314     29635
           9     0.2568    0.0035    0.0068     10955
          10     0.4318    0.0054    0.0108      6973

    accuracy                         0.2940    120286
   macro avg     0.2071    0.1296    0.1013    120286
weighted avg     0.2724    0.2940    0.2221    120286
```

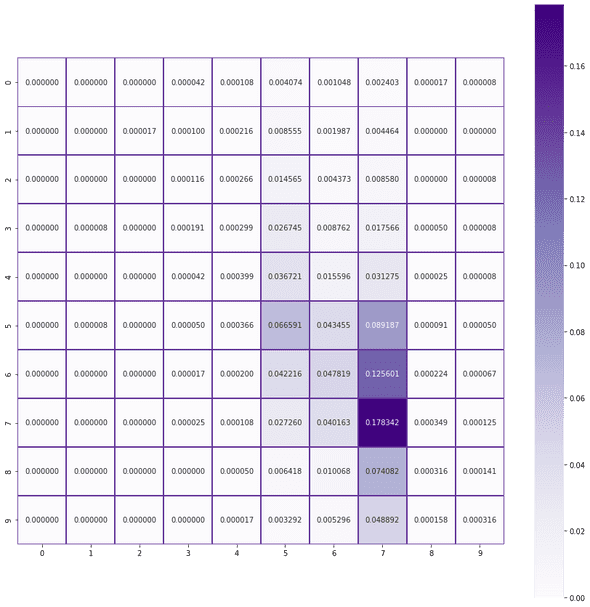

```python
print("Confusion Matrix for Multinomial Naive Bayes:")

# normalize='all': each cell is a fraction of the whole development set
mnb_cm = confusion_matrix(y_dev, mnbc_predicted, normalize='all')

fig, ax = plt.subplots(figsize=(15, 15))
sns.heatmap(mnb_cm, annot=True, linewidths=0.5, ax=ax,
            cmap="Purples", linecolor="#663399", fmt="f", square=True)
fig.show()
```

```
Confusion Matrix for Multinomial Naive Bayes:
```

Let's see how well our model handles a few example reviews.

```python
def check_real_review_predictions(model, vectorizer):
    real_reviews = ["This game is absolutely pathetic. Horrible story and characters. Will never play this game again.",
                    "Worst.",
                    "One of the best games I've ever played. Amazing story and characters. Recommended.",
                    "good."]

    # Note: fit_transform recomputes the IDF weights from these four reviews only;
    # vectorizer.transform(real_reviews) would reuse the IDF learned on the training set
    test_vectorizer = TfidfVectorizer(vocabulary=vectorizer.vocabulary_)
    vectorized_review = test_vectorizer.fit_transform(real_reviews)
    predicted = model.predict(vectorized_review)
    # Pair each review with its predicted rating
    combined = np.vstack((real_reviews, predicted)).T
    return combined


preds = check_real_review_predictions(mnbc, vectorizer)
preds
```

```
array([['This game is absolutely pathetic. Horrible story and characters. Will never play this game again.',
        '7'],
       ['Worst.', '6'],
       ["One of the best games I've ever played. Amazing story and characters. Recommended.",
        '8'],
       ['good.', '7']], dtype='<U97')
```

Well, this is exactly what we feared: all the predicted ratings fall between 6 and 8.

It looks like our model is biased by the imbalance in our input data. I've got a feeling that some form of regression would work slightly better here. Let's give Linear Regression a shot.

```python
linreg = LinearRegression(n_jobs=4)

linreg.fit(X_train_vec, y_train)

linreg_predicted = linreg.predict(X_dev_vec)

# Round the continuous predictions so they can be compared with the integer labels
acc_score = accuracy_score(y_dev, np.round(linreg_predicted))
linreg_accuracy_round = round(acc_score * 100, 2)

gc.collect()

print("Accuracy for Linear Regression = {}%".format(linreg_accuracy_round))
accuracy_log.append(acc_score)
```

```
Accuracy for Linear Regression = 27.47%
```

The accuracy is a little worse, but let's check some of our reviews before we dismiss this idea.

```python
preds = check_real_review_predictions(linreg, vectorizer)
preds
```

```
array([['This game is absolutely pathetic. Horrible story and characters. Will never play this game again.',
        '4.437034389776194'],
       ['Worst.', '2.3588930267669355'],
       ["One of the best games I've ever played. Amazing story and characters. Recommended.",
        '9.024957161994099'],
       ['good.', '7.021012417756805']], dtype='<U97')
```
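The linear model's outputs above are continuous and unbounded, so `np.round` alone can produce values such as 0 or 11 that never match a label. One common fix, shown here as a sketch with a hypothetical `to_rating` helper, is to round and then clip to the 1 to 10 range before computing accuracy. The first four inputs are the predictions printed above; the last two are made up to show the clipping.

```python
def to_rating(prediction, low=1, high=10):
    """Round a regression output and clip it to the valid rating range."""
    return min(high, max(low, round(prediction)))


predictions = [4.437034389776194, 2.3588930267669355, 9.024957161994099,
               7.021012417756805, 11.3, -0.4]
print([to_rating(p) for p in predictions])  # [4, 2, 9, 7, 10, 1]
```

With NumPy arrays the same idea is `np.clip(np.round(linreg_predicted), 1, 10)`.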

Perfect. Looks like we are on the right track. Since this is mainly a classification problem, Logistic Regression would be a better fit here. Let's try that:

```python
logreg = LogisticRegression(n_jobs=4)

logreg.fit(X_train_vec, y_train)

logreg_predicted = logreg.predict(X_dev_vec)

# Compute this model's own score (acc_score previously held the Linear Regression value)
acc_score = accuracy_score(y_dev, logreg_predicted)
logreg_accuracy = round(acc_score * 100, 2)
logreg_report = classification_report(
    y_dev, logreg_predicted, digits=4, zero_division=0)

gc.collect()

print("Accuracy for Logistic Regression = {}%".format(logreg_accuracy))
print()
accuracy_log.append(acc_score)
print("Classification Report for Logistic Regression")
print(logreg_report)
```

```
Accuracy for Logistic Regression = 29.94%

Classification Report for Logistic Regression
              precision    recall  f1-score   support

           1     0.2321    0.0594    0.0946       926
           2     0.2030    0.0580    0.0902      1845
           3     0.2054    0.0316    0.0547      3357
           4     0.2100    0.0632    0.0972      6451
           5     0.2145    0.0562    0.0890     10112
           6     0.2888    0.3937    0.3332     24033
           7     0.2679    0.2854    0.2764     25999
           8     0.3337    0.5489    0.4151     29635
           9     0.2703    0.0481    0.0817     10955
          10     0.3354    0.1565    0.2134      6973

    accuracy                         0.2994    120286
   macro avg     0.2561    0.1701    0.1745    120286
weighted avg     0.2818    0.2994    0.2647    120286
```

While our accuracy is better, the solver hasn't fully converged within its default iteration limit. Let's raise the maximum number of iterations and see where it converges.

The default value of max_iter is 100, so we will increase it to 1000 and see how the model does.

```python
logreg = LogisticRegression(max_iter=1000, n_jobs=4)

logreg.fit(X_train_vec, y_train)

logreg_predicted = logreg.predict(X_dev_vec)

acc_score = accuracy_score(y_dev, logreg_predicted)
logreg_accuracy = round(acc_score * 100, 2)
logreg_report = classification_report(
    y_dev, logreg_predicted, digits=4, zero_division=0)

gc.collect()

print("Accuracy for Logistic Regression = {}%".format(logreg_accuracy))
print()
accuracy_log.append(acc_score)
print("Classification Report for Logistic Regression")
print(logreg_report)
print("Solution converged at iteration = {}".format(logreg.n_iter_[-1]))
```

```
Accuracy for Logistic Regression = 30.08%

Classification Report for Logistic Regression
              precision    recall  f1-score   support

           1     0.3687    0.0713    0.1195       926
           2     0.2318    0.0569    0.0914      1845
           3     0.2272    0.0349    0.0604      3357
           4     0.2103    0.0629    0.0969      6451
           5     0.2022    0.0552    0.0867     10112
           6     0.2902    0.3916    0.3334     24033
           7     0.2717    0.2817    0.2766     25999
           8     0.3310    0.5616    0.4165     29635
           9     0.2674    0.0504    0.0848     10955
          10     0.3666    0.1428    0.2056      6973

    accuracy                         0.3008    120286
   macro avg     0.2767    0.1709    0.1772    120286
weighted avg     0.2849    0.3008    0.2651    120286

Solution converged at iteration = 637
```

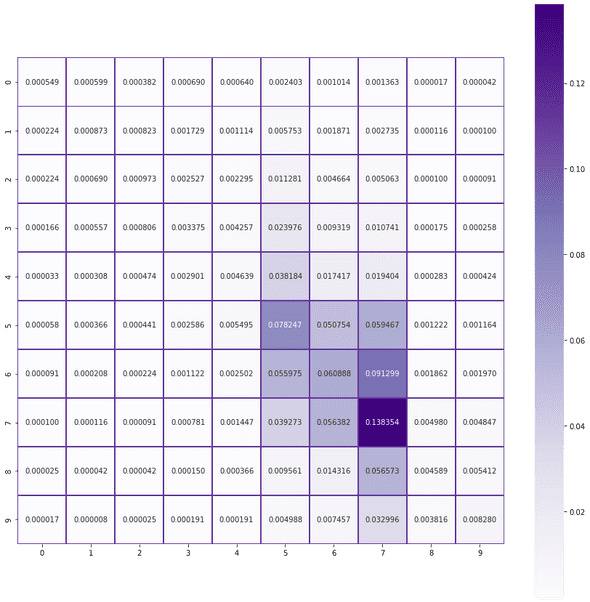

Let's have a look at the confusion matrix and see if things got any better.

```python
print("Confusion Matrix for Logistic Regression:")

# normalize='all': each cell is a fraction of the whole development set
logreg_cm = confusion_matrix(y_dev, logreg_predicted, normalize='all')

fig, ax = plt.subplots(figsize=(15, 15))
sns.heatmap(logreg_cm, annot=True, linewidths=0.5, ax=ax,
            cmap="Purples", linecolor="#663399", fmt="f", square=True)
fig.show()
```

```
Confusion Matrix for Logistic Regression:
```

Accuracy improved only marginally (from 29.94% to 30.08%), but the solver has now converged and the confusion matrix looks slightly better. Let's see how it classifies our example reviews.

```python
preds = check_real_review_predictions(logreg, vectorizer)
preds
```

```
array([['This game is absolutely pathetic. Horrible story and characters. Will never play this game again.',
        '1'],
       ['Worst.', '1'],
       ["One of the best games I've ever played. Amazing story and characters. Recommended.",
        '10'],
       ['good.', '7']], dtype='<U97')
```

Perfect! These ratings roughly correspond to what we were expecting.

But before we move ahead, let's check that these metrics are consistent using Stratified K-Fold cross-validation.

Since we are dealing with imbalanced data, a Stratified K-Fold is necessary to maintain the distribution of the input dataset.
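The idea behind stratification can be sketched without scikit-learn: deal the samples of each class out to the folds in turn, so every fold ends up with roughly the same class proportions. `stratified_folds` is a hypothetical helper and a simplification; scikit-learn's `StratifiedKFold` handles uneven class sizes more carefully. The labels are a made-up, imbalanced toy set.

```python
from collections import Counter, defaultdict


def stratified_folds(labels, k):
    """Split sample positions into k folds, dealing each class out round-robin."""
    by_class = defaultdict(list)
    for position, label in enumerate(labels):
        by_class[label].append(position)
    folds = [[] for _ in range(k)]
    for positions in by_class.values():
        for j, position in enumerate(positions):
            folds[j % k].append(position)
    return folds


labels = [8] * 6 + [7] * 3 + [1] * 3  # imbalanced toy ratings
folds = stratified_folds(labels, 3)
for members in folds:
    print(sorted(Counter(labels[p] for p in members).items()))
# [(1, 1), (7, 1), (8, 2)] is printed for every fold
```

The folds hold positions, not DataFrame index labels, so selecting the matching rows from a pandas Series calls for `.iloc`.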

```python
from sklearn.model_selection import StratifiedKFold

# Stratified folds keep the rating distribution of y_train in every split
skfold = StratifiedKFold(n_splits=3)

for fold, (train_index, test_index) in enumerate(
        skfold.split(X_train, y_train), start=1):
    # split() returns positional indices, so select rows with .iloc
    X_tr, X_te = X_train.iloc[train_index], X_train.iloc[test_index]
    y_tr, y_te = y_train.iloc[train_index], y_train.iloc[test_index]

    # A fresh vectorizer per fold; named so it doesn't overwrite `vectorizer`
    fold_vectorizer = TfidfVectorizer()
    X_tr_vec = fold_vectorizer.fit_transform(X_tr)
    X_te_vec = fold_vectorizer.transform(X_te)

    logreg.fit(X_tr_vec, y_tr)
    logreg_predicted = logreg.predict(X_te_vec)

    acc_score = accuracy_score(y_te, logreg_predicted)
    logreg_accuracy = round(acc_score * 100, 2)

    gc.collect()

    print("Accuracy for Logistic Regression Fold {} = {}%".format(
        fold, logreg_accuracy))
    print()
```

```
Accuracy for Logistic Regression Fold 1 = 30.94%

Accuracy for Logistic Regression Fold 2 = 31.6%

Accuracy for Logistic Regression Fold 3 = 28.53%
```
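A compact way to summarise how consistent the folds are is to report their mean and spread rather than three separate numbers. The sketch below just takes the three fold accuracies printed above and uses Python's `statistics` module; with only three folds the standard deviation is a rough indication, not a precise estimate.

```python
import statistics

# Fold accuracies (%) printed by the cross-validation loop above
fold_accuracies = [30.94, 31.6, 28.53]

mean_acc = statistics.mean(fold_accuracies)
std_acc = statistics.stdev(fold_accuracies)  # sample standard deviation

print("Cross-validated accuracy = {:.2f}% +/- {:.2f}".format(mean_acc, std_acc))
```

That works out to roughly 30.4% with a spread of about 1.6 percentage points, in line with the 30.08% on the dev set.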

Looks like Logistic Regression is performing fairly consistently across the folds and compares well with its linear counterpart, or even Naive Bayes for that matter. Let's see how the model performs on the unseen test data.

```python
logreg.fit(X_train_vec, y_train)
final_predicted = logreg.predict(X_test_vec)

acc_score = accuracy_score(y_test, final_predicted)
logreg_accuracy = round(acc_score * 100, 2)

print("Final Accuracy for test data with Logistic Regression = {}%".format(
    logreg_accuracy))
```

```
Final Accuracy for test data with Logistic Regression = 30.16%
```

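While this isn't the accuracy we were hoping for, it looks like our accuracy is plateauing at around 30%. This could be due to the inherent bias in the dataset: ratings 6, 7 and 8 make up roughly two-thirds of the dev set, and those are the only ratings the model recalls reasonably well.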
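To put the 30% in context, it helps to compare it with the simplest possible baseline: always predicting the most common rating. The sketch below computes that baseline, and the share of ratings 6 to 8, from the dev-set supports in the classification report. It is arithmetic on the reported numbers, not a new experiment.

```python
# Support per rating in the dev set, copied from the classification report
support = {1: 926, 2: 1845, 3: 3357, 4: 6451, 5: 10112,
           6: 24033, 7: 25999, 8: 29635, 9: 10955, 10: 6973}
total = sum(support.values())

# A "model" that always predicts the most common rating
majority_rating = max(support, key=support.get)
baseline_accuracy = support[majority_rating] / total

# Share of reviews rated 6, 7 or 8
mid_share = sum(support[r] for r in (6, 7, 8)) / total

print("Always predicting {} -> {:.2%} accuracy".format(
    majority_rating, baseline_accuracy))
print("Ratings 6-8 make up {:.2%} of the dev set".format(mid_share))
```

Always predicting 8 already scores about 24.6%, so the model's roughly 30% is only a few points above a constant guess, which is consistent with the skew described above.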

Since the accuracy seems to plateau, I will train the final model on around 50% of the data and pickle it, so that we can use it in our online Flask application to predict the rating of any new review a user enters.

```python
# Keep ~50% of the reviews for training; the 1% "test" split is discarded
X_train, _, y_train, _ = train_test_split(
    reviews.comment, reviews.rating, train_size=0.5, test_size=0.01)
X_train = X_train.apply(' '.join)

print("Number of records chosen for training the final model = {}".format(
    X_train.shape[0]))

vectorizer = TfidfVectorizer()
X_train_vec = vectorizer.fit_transform(X_train)

logreg.fit(X_train_vec, y_train)
gc.collect()
```

```
Number of records chosen for training the final model = 1202859
```

We will pickle this model and the vocabulary of the TF-IDF vectorizer. Both will be used in the Flask application that we will be building.

```python
# MODEL_PICKLE and VOCABULARY_PICKLE already include DATA_DIR,
# so they are passed to os.remove() as-is
if os.path.isfile(MODEL_PICKLE):
    print('Pickled model already present, replacing it')
    os.remove(MODEL_PICKLE)

if os.path.isfile(VOCABULARY_PICKLE):
    print('Pickled vocab already present, replacing it')
    os.remove(VOCABULARY_PICKLE)

print("Pickling model")
with open(MODEL_PICKLE, "wb") as f:
    pickle.dump(logreg, f)

print("Pickling vocabulary")
with open(VOCABULARY_PICKLE, "wb") as f:
    pickle.dump(vectorizer.vocabulary_, f)
```

```
Pickling model
Pickling vocabulary
```
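One caveat with pickling only `vectorizer.vocabulary_`: the vocabulary maps each term to a column index, but the learned IDF weights live in `vectorizer.idf_`. If the Flask app rebuilds a `TfidfVectorizer(vocabulary=...)` from the vocabulary alone, that vectorizer has no IDF weights and must be fitted again before it can transform anything, and refitting on incoming reviews would not reproduce the training features. A simpler option, sketched below on a tiny made-up corpus, is to pickle the fitted vectorizer together with the model. The corpus, ratings and dictionary keys are placeholders, not the notebook's data.

```python
import pickle

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression

# Tiny hypothetical corpus standing in for the cleaned reviews
docs = ["great fun game", "boring game", "great strategy", "boring and long"]
ratings = [9, 2, 9, 2]

vectorizer = TfidfVectorizer()
model = LogisticRegression(max_iter=1000).fit(
    vectorizer.fit_transform(docs), ratings)

# Pickle the fitted vectorizer itself: it carries both vocabulary_ and idf_
blob = pickle.dumps({"vectorizer": vectorizer, "model": model})

# ...later, e.g. when the web server starts
restored = pickle.loads(blob)
new_review = ["great game"]
pred = restored["model"].predict(restored["vectorizer"].transform(new_review))
print(pred)
```

Wrapping both steps in a scikit-learn `Pipeline` and pickling that single object would work the same way.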

Challenges faced

The biggest challenges that I faced revolved around cleaning the dataset and dealing with imbalanced data.

Every model that I tested gave similar accuracy, which led me to conclude that the dataset itself introduces an implicit bias.

The dataset was also difficult to clean and to train models on, due to the sheer volume of data and the hardware constraints.

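Even when attempting to deal with the imbalanced data, accuracy did not improve. SMOTE creates synthetic samples by interpolating between feature vectors, which does not translate well to sparse, high-dimensional TF-IDF text features, and StratifiedKFold only preserves the class distribution in each fold rather than correcting it.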
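One option for the imbalance that this notebook did not try is to reweight the classes instead of resampling them. scikit-learn's `LogisticRegression` accepts `class_weight="balanced"`, which weights each class by `n_samples / (n_classes * count)`. The sketch below applies that formula to the dev-set supports from the classification report (as a stand-in for the training counts, which should follow the same distribution) to show how strongly rare ratings would be up-weighted. Whether this actually improves results on this dataset is untested.

```python
# Support per rating in the dev set, copied from the classification report
support = {1: 926, 2: 1845, 3: 3357, 4: 6451, 5: 10112,
           6: 24033, 7: 25999, 8: 29635, 9: 10955, 10: 6973}
n_samples = sum(support.values())
n_classes = len(support)

# "balanced" weighting: n_samples / (n_classes * count of that rating)
class_weight = {r: n_samples / (n_classes * c) for r, c in support.items()}

for rating, weight in class_weight.items():
    print("rating {:>2}: weight {:.2f}".format(rating, weight))
```

A review rated 1 would count about 13 times in the loss, while a review rated 8 would count about 0.4 times. In practice you would pass `class_weight="balanced"` to `LogisticRegression` and let it compute these from `y_train`.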

The other challenge I faced was deploying the model to a production environment. If I used a majority of the data to train the model, it became too heavy and every AJAX call to the server took a long time to load.

Thus, I ended up loading the model as soon as the server started and caching it in memory, which sped up subsequent requests.
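The load-once idea can be sketched in plain Python. This is not the code from the Flask app: `load_artifact`, `LOAD_COUNT` and the throwaway pickle are hypothetical, and `functools.lru_cache` is just one way to keep the unpickled object in memory so that only the first call reads from disk.

```python
import functools
import os
import pickle
import tempfile

LOAD_COUNT = {"n": 0}  # counts actual disk reads (demo only)


@functools.lru_cache(maxsize=None)
def load_artifact(path):
    """Unpickle `path` on the first call; later calls reuse the cached object."""
    LOAD_COUNT["n"] += 1
    with open(path, "rb") as f:
        return pickle.load(f)


# Demo with a throwaway pickle standing in for model.pkl
path = os.path.join(tempfile.mkdtemp(), "model.pkl")
with open(path, "wb") as f:
    pickle.dump({"weights": [0.1, 0.2]}, f)

first = load_artifact(path)
second = load_artifact(path)
print(first is second, LOAD_COUNT["n"])
```

In a web app, calling something like `load_artifact(MODEL_PICKLE)` once at module import moves the slow unpickling to server start-up, and every request handler then gets the same in-memory object.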

My contribution

Built a Flask application that predicts the rating of any review. Please visit the page here and enter a review. You should see the prediction for that review. This application has been deployed on Heroku and the form is available on my Portfolio website that is built using GatsbyJS. The code for this application is available on my GitHub.

You can also see a demo of this application on YouTube.

A copy of the dataset and its pickle files are available here, and the IPython Notebook can be downloaded from here (right-click and Save As to download the file).

References

Sending POST Request via Axios