We will implement a Naive Bayes classifier on the Large Movie Reviews dataset from Stanford AI. The classifier needs to predict whether the sentiment of a review is positive or negative.

We begin by importing all the necessary libraries.

```python
import glob
import os
import random
import re
import string

import matplotlib.pyplot as plt
import nltk
import numpy as np
import pandas as pd
import seaborn as sns

from IPython.display import display, HTML
from nltk.corpus import stopwords
from wordcloud import WordCloud
```

```python
# 'wordnet' is required by the WordNetLemmatizer used later on.
nltk.download(['words', 'stopwords', 'wordnet'], quiet=True)

base_path = 'data/aclImdb/'
subdirs = ['pos', 'neg']
COLUMNS = ['review', 'sentiment', 'p_pos', 'p_neg', 'predicted_sentiment']
```

Load dataset

First things first, let us load the data into a DataFrame. In this case, the training and testing data are already separated. We shuffle the data to ensure that we don't end up biasing our model.

Also, we will pickle these DataFrames to make subsequent runs faster.

```python
def load_data(dir_type="train"):
    """Read every review under base_path/<dir_type>/{pos,neg} into a DataFrame."""
    rows = []
    for sub in subdirs:
        new_path = base_path + dir_type + "/" + sub + "/"
        for filename in glob.glob(new_path + "*.txt"):
            with open(filename, 'r') as f:
                rows.append({"review": f.read(), "sentiment": sub})
    # Building the frame once avoids DataFrame.append, which pandas 2.0 removed.
    return pd.DataFrame(rows, columns=COLUMNS)


# Set these to True to force a reload from the raw text files.
train_data_changed = False
test_data_changed = False

train_pickle = "./train.pkl"
test_pickle = "./test.pkl"

if os.path.isfile(train_pickle) and not train_data_changed:
    train_data = pd.read_pickle(train_pickle, compression="gzip")
else:
    train_data = load_data("train")
    print("Pickling training data")
    train_data.to_pickle(train_pickle, compression="gzip")

if os.path.isfile(test_pickle) and not test_data_changed:
    test_data = pd.read_pickle(test_pickle, compression="gzip")
else:
    test_data = load_data("test")
    print("Pickling testing data")
    test_data.to_pickle(test_pickle, compression="gzip")

# Shuffle the rows so that positive and negative reviews are interleaved.
train_data = train_data.sample(frac=1).reset_index(drop=True)
test_data = test_data.sample(frac=1).reset_index(drop=True)

print()
print("Training data:")
display(train_data)
print("Testing data:")
display(test_data)
```

```text
Pickling training data
Pickling testing data

Training data:
```

| | review | sentiment | p_pos | p_neg | predicted_sentiment |
|---|---|---|---|---|---|
| 0 | I gave this film 10 not because it is a superb... | pos | NaN | NaN | NaN |
| 1 | For a low budget project, the Film was a succe... | pos | NaN | NaN | NaN |
| 2 | I recently watched the first Guinea Pig film, ... | neg | NaN | NaN | NaN |
| 3 | The movie itself is so pathetic. It portrayed ... | neg | NaN | NaN | NaN |
| 4 | The Second Renaissance, part 1 let's us show h... | neg | NaN | NaN | NaN |
| ... | ... | ... | ... | ... | ... |
| 24995 | A brilliant chess player attends a tournament ... | pos | NaN | NaN | NaN |
| 24996 | The first time I saw this, I didn't laugh too ... | pos | NaN | NaN | NaN |
| 24997 | Highly enjoyable, very imaginative, and filmic... | pos | NaN | NaN | NaN |
| 24998 | After Life is a Miracle, I did not expect much... | neg | NaN | NaN | NaN |
| 24999 | I was worried that my daughter might get the w... | pos | NaN | NaN | NaN |

25000 rows × 5 columns

```text
Testing data:
```

| | review | sentiment | p_pos | p_neg | predicted_sentiment |
|---|---|---|---|---|---|
| 0 | The movie was awful. The production company sh... | neg | NaN | NaN | NaN |
| 1 | eXistenZ was a good film, at the first I was w... | pos | NaN | NaN | NaN |
| 2 | As it turns out, Chris Farley and David Spade ... | pos | NaN | NaN | NaN |
| 3 | The bipolarity of this movie is maddening. One... | neg | NaN | NaN | NaN |
| 4 | This is more than just an adaptation of Bond: ... | neg | NaN | NaN | NaN |
| ... | ... | ... | ... | ... | ... |
| 24995 | This is,in short,the TV comedy series with the... | pos | NaN | NaN | NaN |
| 24996 | I thought this movie was quite good. It was on... | pos | NaN | NaN | NaN |
| 24997 | Lucio Fulci, a director not exactly renowned f... | neg | NaN | NaN | NaN |
| 24998 | When I first saw this I thought bits of it wer... | neg | NaN | NaN | NaN |
| 24999 | Thought provoking, humbling depiction of the h... | pos | NaN | NaN | NaN |

25000 rows × 5 columns

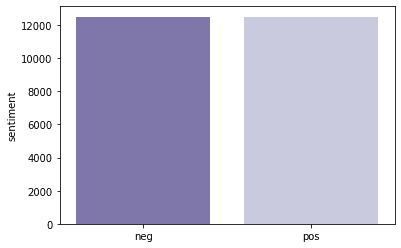

Next, we check whether the data is balanced, i.e. whether each class has roughly the same number of data points. A balanced dataset helps prevent the model from developing a bias towards one class during training.

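```python
sentiment_counts = train_data['sentiment'].value_counts()
# Recent seaborn releases require x and y to be passed as keyword arguments.
sns.barplot(x=sentiment_counts.index, y=sentiment_counts.values, palette='Purples_r')
plt.show()
```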

Data Cleaning

This dataset in particular is perfectly balanced, as all things should be. Now, since we have all the data loaded, we need to clean it.

There are numerous methods that we can use to clean data and here we will implement some of them. Most of the cleaning depends on the dataset at hand so make sure you explore the dataset before you begin cleaning.

To start off, we will do the following:

- Ensure all text is in lowercase

- Remove unnecessary whitespace

- Remove HTML tags

- Remove URLs

- Remove punctuation

- Remove other types of spaces such as newlines and tab spaces

- Remove words that contain digits (usually usernames)

- Drop words of one or two characters

- Remove text enclosed in square brackets

```python
def clean_text(text):
    text = text.lower().strip()
    # Drop one- and two-character words; this also collapses repeated whitespace.
    text = " ".join([w for w in text.split() if len(w) > 2])
    text = re.sub(r'\[.*?\]', '', text)                # text in square brackets
    text = re.sub(r'https?://\S+|www\.\S+', '', text)  # URLs
    text = re.sub(r'<.*?>+', '', text)                 # HTML tags
    text = re.sub('[%s]' % re.escape(string.punctuation), '', text)
    text = re.sub(r'\n', '', text)                     # newlines
    text = re.sub(r'\w*\d\w*', '', text)               # words containing digits
    return text


train_data['review'] = train_data['review'].apply(clean_text)
test_data['review'] = test_data['review'].apply(clean_text)

print("Cleaned data:")
display(train_data.head())
display(test_data.head())
```

```text
Cleaned data:
```

| | review | sentiment | p_pos | p_neg | predicted_sentiment |
|---|---|---|---|---|---|
| 0 | gave this film not because superbly consistent... | pos | NaN | NaN | NaN |
| 1 | for low budget project the film was success th... | pos | NaN | NaN | NaN |
| 2 | recently watched the first guinea pig film the... | neg | NaN | NaN | NaN |
| 3 | the movie itself pathetic portrayed deaf peopl... | neg | NaN | NaN | NaN |
| 4 | the second renaissance part lets show how the ... | neg | NaN | NaN | NaN |

| | review | sentiment | p_pos | p_neg | predicted_sentiment |
|---|---|---|---|---|---|
| 0 | the movie was awful the production company sho... | neg | NaN | NaN | NaN |
| 1 | existenz was good film the first was wondering... | pos | NaN | NaN | NaN |
| 2 | turns out chris farley and david spade only ma... | pos | NaN | NaN | NaN |
| 3 | the bipolarity this movie maddening one moment... | neg | NaN | NaN | NaN |
| 4 | this more than just adaptation bond its plain ... | neg | NaN | NaN | NaN |

Data exploration using WordCloud

Now that we've performed some basic cleaning, which is generally the same for most textual datasets, we need to perform some dataset-specific cleaning.

Much of the text in a dataset can be considered "noise": words that help humans follow the context but carry little signal for a model. Since computers can't use that context, not to the extent we'd like, at least, we are better off removing this noise.

This often helps us clearly see relationships and trends in the dataset and reduces unnecessary computation.

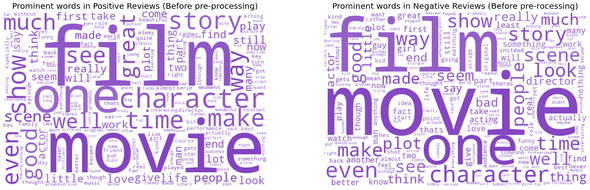

Before we do that, let's see what kinds of words are dominating each of the datasets. To do that, we generate a WordCloud for both the Positive and the Negative Reviews.

```python
def purple_color_func(word, font_size, position, orientation, random_state=None, **kwargs):
    return "hsl(270, 50%%, %d%%)" % random.randint(50, 60)


pos_reviews = train_data[train_data['sentiment'] == "pos"]['review'].copy(deep=True)
neg_reviews = train_data[train_data['sentiment'] == "neg"]['review'].copy(deep=True)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=[26, 8])
wordcloud1 = WordCloud(background_color='white',
                       color_func=purple_color_func,
                       random_state=3,
                       width=600,
                       height=400).generate(" ".join(pos_reviews))
ax1.imshow(wordcloud1)
ax1.axis('off')
ax1.set_title('Prominent words in Positive Reviews (Before pre-processing)', fontsize=20)

wordcloud2 = WordCloud(background_color='white',
                       color_func=purple_color_func,
                       random_state=3,
                       width=600,
                       height=400).generate(" ".join(neg_reviews))
ax2.imshow(wordcloud2)
ax2.axis('off')
ax2.set_title('Prominent words in Negative Reviews (Before pre-processing)', fontsize=20)
plt.show()
```

Tokenization of Reviews

We see that words like film, movie, one, character, show, etc. are dominating the reviews. Most of these words don't contribute to the sentiment but are present in the review for structure.

We can also see that there are several other words such as stopwords that don't really contribute much to the reviews as well.

We will remove all these words to reduce the noise and surface the important words that contribute to the sentiment of the review.

Before we do that, we need to tokenize the data. Each review is split into a list of words which we can easily work on.

```python
tokenizer = nltk.tokenize.RegexpTokenizer(r'\w+')

train_data['review'] = train_data['review'].apply(tokenizer.tokenize)
test_data['review'] = test_data['review'].apply(tokenizer.tokenize)

print("Tokenized reviews:")
display(train_data.head())
display(test_data.head())
```

```text
Tokenized reviews:
```

| | review | sentiment | p_pos | p_neg | predicted_sentiment |
|---|---|---|---|---|---|
| 0 | [gave, this, film, not, because, superbly, con... | pos | NaN | NaN | NaN |
| 1 | [for, low, budget, project, the, film, was, su... | pos | NaN | NaN | NaN |
| 2 | [recently, watched, the, first, guinea, pig, f... | neg | NaN | NaN | NaN |
| 3 | [the, movie, itself, pathetic, portrayed, deaf... | neg | NaN | NaN | NaN |
| 4 | [the, second, renaissance, part, lets, show, h... | neg | NaN | NaN | NaN |

| | review | sentiment | p_pos | p_neg | predicted_sentiment |
|---|---|---|---|---|---|
| 0 | [the, movie, was, awful, the, production, comp... | neg | NaN | NaN | NaN |
| 1 | [existenz, was, good, film, the, first, was, w... | pos | NaN | NaN | NaN |
| 2 | [turns, out, chris, farley, and, david, spade,... | pos | NaN | NaN | NaN |
| 3 | [the, bipolarity, this, movie, maddening, one,... | neg | NaN | NaN | NaN |
| 4 | [this, more, than, just, adaptation, bond, its... | neg | NaN | NaN | NaN |
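For intuition, the \w+ pattern simply picks out runs of word characters. The sketch below reproduces that behaviour with the standard re module only; the tokenize helper is a hypothetical stand-in that should behave like the RegexpTokenizer call on this kind of cleaned text, not a replacement for it.

```python
import re


def tokenize(text):
    """Return every run of word characters found in text, in order."""
    return re.findall(r"\w+", text)


# Repeated spaces left over from cleaning do not produce empty tokens.
print(tokenize("the movie  itself pathetic"))
```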

Stopwords Removal

Now that the data is tokenized, we can remove the stopwords.

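I've also included a list of other words that I feel don't contribute to the positive or negative sentiment, based on the WordCloud we just generated.

Note that remove_stopwords does more than its name suggests: it also discards words that are missing from the NLTK words corpus and keeps only the distinct words that occur at least twice within a review, which is why some reviews end up as empty lists.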

```python
def remove_stopwords(text):
    # Keep words that are in the NLTK words corpus and are not stopwords
    # (non-alphabetic tokens are passed through unchanged).
    words = [
        w for w in text
        if (w not in stop_words and w in words_corpus) or not w.isalpha()]
    # Keep only the distinct words that occur at least twice in the review.
    words = list(filter(lambda word: words.count(word) >= 2, set(words)))
    return words


words_corpus = set(nltk.corpus.words.words())
stop_words = set(stopwords.words('english'))

remove = ['movie', 'film', 'one', 'made', 'many', 'time', 'story', 'character', 'still', 'seen', 'picture', 'people', 'see', 'never', 'come',
          'even', 'way', 'plot', 'house', 'horror', 'think', 'make', 'first', 'scene', 'director', 'two', 'show', 'become', 'brother', 'che', 'got', 'ago']
stop_words = stop_words.union(remove)

print("Removing {} stopwords from text".format(len(stop_words)))
print()

train_data['review'] = train_data['review'].apply(remove_stopwords)
test_data['review'] = test_data['review'].apply(remove_stopwords)

print("After removing stopwords:")
display(train_data.head())
display(test_data.head())
```

```text
Removing 211 stopwords from text

After removing stopwords:
```

| | review | sentiment | p_pos | p_neg | predicted_sentiment |
|---|---|---|---|---|---|
| 0 | [] | pos | NaN | NaN | NaN |
| 1 | [could, project, low, budget] | pos | NaN | NaN | NaN |
| 2 | [truth, guinea, look, away, pig, might, didnt,... | neg | NaN | NaN | NaN |
| 3 | [like, boring, hearing, deaf, pathetic] | neg | NaN | NaN | NaN |
| 4 | [right, violent, silly, renaissance, second, p... | neg | NaN | NaN | NaN |

| | review | sentiment | p_pos | p_neg | predicted_sentiment |
|---|---|---|---|---|---|
| 0 | [electricity, send, would] | neg | NaN | NaN | NaN |
| 1 | [going, good] | pos | NaN | NaN | NaN |
| 2 | [company, truly, auto, tommy, spade, big] | pos | NaN | NaN | NaN |
| 3 | [hero, star, revolutionary, basically, point] | neg | NaN | NaN | NaN |
| 4 | [] | neg | NaN | NaN | NaN |
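The empty lists in these tables follow from the repetition filter inside remove_stopwords: a word survives only if it occurs at least twice in the same review. The sketch below isolates that logic on a toy review. The helper filter_review, the toy stopword set and the toy vocabulary are all hypothetical, and the non-alphabetic pass-through of the original function is left out for brevity.

```python
def filter_review(tokens, stop_words, vocabulary):
    """Keep vocabulary words that are not stopwords and occur at least twice."""
    kept = [w for w in tokens if w not in stop_words and w in vocabulary]
    # Deduplicate, keeping only the words repeated within this review.
    return sorted(w for w in set(kept) if kept.count(w) >= 2)


toy_stop_words = {"the", "was", "film"}
toy_vocabulary = {"budget", "low", "great"}
tokens = ["the", "budget", "was", "low", "budget", "film", "low", "great"]

# "great" is a valid word but appears only once, so it is dropped as well.
print(filter_review(tokens, toy_stop_words, toy_vocabulary))
```

A short review in which no word is repeated therefore ends up empty, no matter how informative its words are.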

Lemmatization

Next, we lemmatize the data to ensure that reviews don't contain different forms of the same word.

This helps us reduce unnecessary computation.

```python
def lemmatize_data(text):
    return [lemmatizer.lemmatize(w) for w in text]


lemmatizer = nltk.stem.WordNetLemmatizer()

train_data['review'] = train_data['review'].apply(lemmatize_data)
test_data['review'] = test_data['review'].apply(lemmatize_data)

display(train_data.head())
display(test_data.head())
```

| | review | sentiment | p_pos | p_neg | predicted_sentiment |
|---|---|---|---|---|---|
| 0 | [] | pos | NaN | NaN | NaN |
| 1 | [could, project, low, budget] | pos | NaN | NaN | NaN |
| 2 | [truth, guinea, look, away, pig, might, didnt,... | neg | NaN | NaN | NaN |
| 3 | [like, boring, hearing, deaf, pathetic] | neg | NaN | NaN | NaN |
| 4 | [right, violent, silly, renaissance, second, p... | neg | NaN | NaN | NaN |

| | review | sentiment | p_pos | p_neg | predicted_sentiment |
|---|---|---|---|---|---|
| 0 | [electricity, send, would] | neg | NaN | NaN | NaN |
| 1 | [going, good] | pos | NaN | NaN | NaN |
| 2 | [company, truly, auto, tommy, spade, big] | pos | NaN | NaN | NaN |
| 3 | [hero, star, revolutionary, basically, point] | neg | NaN | NaN | NaN |
| 4 | [] | neg | NaN | NaN | NaN |

Removing rarely occurring words

In order to further reduce computation, we will remove rarely occurring words. Since their occurrence is rare, their contribution to the overall sentiment is minuscule.

Due to this, we will remove words that occur 5 times or fewer in the overall dataset.

```python
def remove_rare_words(text, rare_words):
    removed_rare_words = list(set(text) - set(rare_words))
    return removed_rare_words


def find_remove_rare_words(dataframe):
    exploded = dataframe.explode('review')
    word_counts = exploded.review.value_counts(ascending=True)
    # Build the set once so it is not rebuilt for every review.
    rare_words = set(word_counts[word_counts <= 5].index)

    dataframe['review'] = dataframe['review'].apply(
        lambda x: remove_rare_words(x, rare_words))

    print("Before:", exploded.shape[0], "\nAfter:", dataframe.explode(
        'review').shape[0])
    return dataframe


print("Removing rare words for training data")
train_data = find_remove_rare_words(train_data)
print()

print("Removing rare words for test data")
test_data = find_remove_rare_words(test_data)
```

```text
Removing rare words for training data
Before: 196213
After: 184125

Removing rare words for test data
Before: 191739
After: 179867
```
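The same idea can be sketched without pandas, which makes the counting step explicit. This is only an illustration on toy data: drop_rare_words and its max_count parameter are hypothetical names, and unlike the set-based version it preserves the order of the remaining words.

```python
from collections import Counter


def drop_rare_words(reviews, max_count=5):
    """Remove words whose corpus-wide count is max_count or lower."""
    counts = Counter(word for review in reviews for word in review)
    rare = {word for word, n in counts.items() if n <= max_count}
    return [[w for w in review if w not in rare] for review in reviews]


toy_reviews = [["good", "plot"], ["good", "acting"], ["good", "plot"]]

# With max_count=1, only "acting" (seen once) is removed.
print(drop_rare_words(toy_reviews, max_count=1))
```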

Prominent words in reviews after pre-processing

That's pretty much all the cleaning we are going to do on this dataset. Let's join back all the words in the reviews and have a quick glance at the final WordCloud.

```python
def combine_text(list_of_text):
    combined_text = ' '.join(token for token in list_of_text)
    return combined_text


pos_reviews = train_data[train_data['sentiment'] == "pos"]['review'].copy(deep=True).apply(combine_text)
neg_reviews = train_data[train_data['sentiment'] == "neg"]['review'].copy(deep=True).apply(combine_text)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=[26, 8])
wordcloud1 = WordCloud(background_color='white',
                       color_func=purple_color_func,
                       random_state=3,
                       width=600,
                       height=400).generate(" ".join(pos_reviews))
ax1.imshow(wordcloud1)
ax1.axis('off')
ax1.set_title('Prominent words in Positive Reviews (After pre-processing)', fontsize=20)

wordcloud2 = WordCloud(background_color='white',
                       color_func=purple_color_func,
                       random_state=3,
                       width=600,
                       height=400).generate(" ".join(neg_reviews))
ax2.imshow(wordcloud2)
ax2.axis('off')
ax2.set_title('Prominent words in Negative Reviews (After pre-processing)', fontsize=20)
plt.show()
```

We can see that a lot of the noise has been cleared now. Most of the important words that contribute to the positive or negative sentiment are now dominating, which was our goal.

Also, after cleaning, some reviews may have no words left in them because none of their words survived the filters. It's best to just drop these rows and avoid unnecessary computation.

```python
def drop_empty_reviews(df):
    # An empty list is falsy, so astype(bool) marks the reviews with no words left.
    df = df.drop(df[~df.review.astype(bool)].index)
    return df


train_data = drop_empty_reviews(train_data)
test_data = drop_empty_reviews(test_data)
```

Splitting training data

Now, we split the training dataset into train and dev sets. We will be using the dev data for testing until we decide on the optimal hyperparameters to use. This helps reduce bias, as the test data remains untouched.

```python
rows = 10000
split = 0.8

mid = int(np.floor_divide(rows, 1/split))

sample_train_data = train_data[:mid].copy(deep=True)
sample_dev_data = train_data[mid:mid + (rows - mid)].copy(deep=True)
sample_test_data = test_data[:rows].copy(deep=True)

print("No. of reviews in training dataset:", sample_train_data.shape[0])
print("No. of reviews in dev dataset:", sample_dev_data.shape[0])
print("No. of reviews in test dataset:", sample_test_data.shape[0])
```

```text
No. of reviews in training dataset: 8000
No. of reviews in dev dataset: 2000
No. of reviews in test dataset: 10000
```

Implementing Naive Bayes

Since the data is ready, we now move to the main part. We will use a Naive Bayes classifier to predict the sentiment of each review.

Since we will be using the dev data, we already have the correct sentiment in the dataset which we will use to calculate the accuracy of our predictions.

The Naive Bayes classifier uses a modified version of Bayes' theorem to achieve this.
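In symbols, and under the "naive" assumption that the words of a review are conditionally independent given its sentiment, the decision rule can be written as follows. The notation is my own shorthand, not the notebook's: $d$ is a review with words $w_1, \dots, w_n$, and $s$ is a sentiment.

$$
P(s \mid d) \;\propto\; P(s) \prod_{i=1}^{n} P(w_i \mid s),
\qquad
\hat{s} = \arg\max_{s \in \{\mathrm{pos},\, \mathrm{neg}\}} P(s) \prod_{i=1}^{n} P(w_i \mid s)
$$

The two factors are estimated from the training reviews, where $N$ is the total number of reviews, $N_s$ the number of reviews with sentiment $s$, and $N_{w,s}$ the number of those reviews that contain the word $w$:

$$
P(s) = \frac{N_s}{N}, \qquad P(w \mid s) = \frac{N_{w,s}}{N_s}
$$

The denominator of Bayes' theorem, $P(d)$, is the same for both sentiments, so it can be dropped when we only compare the two scores.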

```python
# p_w_sent = # of docs in df[sent] that contain w / # of docs in df[sent] -> likelihood
# p_sent = # of docs in df[sent] / # of total docs -> class prior prob

# pos_prob = p_sent_pos * (p_w1_sent_pos * p_w2_sent_pos * ...)
# neg_prob = p_sent_neg * (p_w1_sent_neg * p_w2_sent_neg * ...)

# p_sent_w = max(pos_prob, neg_prob) -> posterior prob

def populate_word_probabilities_dict(train):
    word_counts = {}

    docs_w_pos_sent = train[train.sentiment == "pos"]
    no_of_docs_w_pos_sent = docs_w_pos_sent.shape[0]

    docs_w_neg_sent = train[train.sentiment == "neg"]
    no_of_docs_w_neg_sent = docs_w_neg_sent.shape[0]

    for row in train.itertuples():
        for word in row.review:
            # Each word's probabilities only need to be computed once.
            if word in word_counts:
                continue

            no_of_docs_w_word_pos_sent = docs_w_pos_sent[docs_w_pos_sent.review.apply(lambda x: word in x)].shape[0]
            no_of_docs_w_word_neg_sent = docs_w_neg_sent[docs_w_neg_sent.review.apply(lambda x: word in x)].shape[0]

            p_word_w_pos_sent = round(no_of_docs_w_word_pos_sent / no_of_docs_w_pos_sent, 4)
            p_word_w_neg_sent = round(no_of_docs_w_word_neg_sent / no_of_docs_w_neg_sent, 4)

            word_counts[word] = {'p_pos': p_word_w_pos_sent, 'p_neg': p_word_w_neg_sent}
    return word_counts


def nb_predict(train, test, smoothing=False):
    print("Processing")

    train_word_probs = populate_word_probabilities_dict(train)

    correct = 0
    smoothing_param = 0

    if smoothing:
        # A small constant: 1 / total number of words in the training reviews
        # passed to this function.
        smoothing_param = 1 / train.explode('review').review.shape[0]

    # The class priors depend only on the training data, so compute them once.
    total_train_docs = train.shape[0]

    no_of_docs_w_pos_sent = train[train.sentiment == "pos"].shape[0]
    no_of_docs_w_neg_sent = train[train.sentiment == "neg"].shape[0]

    p_pos_sent = round(no_of_docs_w_pos_sent / total_train_docs, 4)
    p_neg_sent = round(no_of_docs_w_neg_sent / total_train_docs, 4)

    for row in test.itertuples():
        pos_prob = 1.0
        neg_prob = 1.0

        for word in row.review:
            # Words never seen in training get a probability of zero.
            p_word_w_pos_sent = 0.0
            p_word_w_neg_sent = 0.0

            if word in train_word_probs:
                probs_word = train_word_probs[word]
                p_word_w_pos_sent = probs_word['p_pos']
                p_word_w_neg_sent = probs_word['p_neg']

            pos_prob = pos_prob * (p_word_w_pos_sent + smoothing_param)
            neg_prob = neg_prob * (p_word_w_neg_sent + smoothing_param)

        pos_prob = p_pos_sent * pos_prob
        neg_prob = p_neg_sent * neg_prob

        # Stays 0 when both scores are equal (for example, when both are zero).
        predicted_sent = 0

        if pos_prob > neg_prob:
            predicted_sent = "pos"
        elif pos_prob < neg_prob:
            predicted_sent = "neg"

        if row.sentiment == predicted_sent:
            correct += 1

        test.at[row.Index, 'p_pos'] = pos_prob
        test.at[row.Index, 'p_neg'] = neg_prob
        test.at[row.Index, 'predicted_sentiment'] = predicted_sent

    accuracy = round(correct / test.shape[0] * 100, 2)
    print("Accuracy: {}%".format(accuracy))
    return


print("Predicting sentiment of review using Naive Bayes Classifier")
print()
nb_predict(sample_train_data, sample_dev_data)

display(sample_dev_data.head(10))
```

```text
Predicting sentiment of review using Naive Bayes Classifier

Processing
Accuracy: 65.7%
```

| | review | sentiment | p_pos | p_neg | predicted_sentiment |
|---|---|---|---|---|---|
| 8621 | [police, final, like, hong, dog, emotional, qu... | pos | 0 | 0 | 0 |
| 8622 | [script, watching] | pos | 7.71513e-05 | 0.000357276 | neg |
| 8623 | [like, ship, mood, young, dog, three, life, bo... | pos | 0 | 1.50634e-49 | neg |
| 8624 | [fun, lot, there] | pos | 4.38243e-06 | 3.91419e-06 | pos |
| 8625 | [like, go, much, serial, couple, young, doesnt... | pos | 7.4e-27 | 2.98703e-27 | pos |
| 8626 | [peter, like, classic, dunne, bunch, fake] | pos | 2.84404e-16 | 5.16792e-15 | neg |
| 8627 | [primary, mention, love, boring, without, woul... | neg | 0 | 3.3792e-16 | neg |
| 8628 | [asylum, like, dean, didnt, power, episode, wh... | neg | 0 | 5.30595e-47 | neg |
| 8629 | [tunnel, office, didnt, take, space, doubt, pr... | neg | 6.1085e-17 | 2.25036e-17 | pos |
| 8630 | [wonderful, music, terrible] | pos | 1.36208e-07 | 1.06704e-07 | pos |

Cross-validation

Let's just ensure that this accuracy is consistent across multiple passes. To do this, we will run a 5-pass cross-validation: in each pass we hold out one fifth of the training dataset for testing and train on the remaining four fifths.

To ensure consistency, a different chunk is held out in every pass. Note that each chunk is drawn as a random sample with a different seed, so the chunks may overlap, unlike in a strict k-fold partition.

```python
def cross_validation(train, k, smoothing=False):
    dev = 1/k

    for i in range(1, k + 1):
        # Hold out a random 1/k sample; the seed changes with every pass.
        dev_chunk = train.sample(
            frac=dev, replace=False, random_state=i).copy(deep=True)
        train_chunk = train.drop(dev_chunk.index, axis=0).copy(deep=True)

        if smoothing:
            print("Cross-validation Pass", i, "with smoothing")
        else:
            print("Cross-validation Pass", i)

        nb_predict(train_chunk, dev_chunk, smoothing)

        print()
    return


cross_validation(sample_train_data, 5)
```

```text
Cross-validation Pass 1
Processing
Accuracy: 64.19%

Cross-validation Pass 2
Processing
Accuracy: 63.12%

Cross-validation Pass 3
Processing
Accuracy: 62.38%

Cross-validation Pass 4
Processing
Accuracy: 62.19%

Cross-validation Pass 5
Processing
Accuracy: 64.62%
```
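Because cross_validation draws every dev chunk with train.sample and a fresh seed, two passes can share some rows. If a strict k-fold partition is preferred, where every row is held out exactly once, the index bookkeeping could look like the sketch below. The helper k_fold_indices and its seed parameter are hypothetical and not part of the notebook.

```python
import random


def k_fold_indices(n_rows, k, seed=0):
    """Yield (train_idx, dev_idx) pairs; each row lands in exactly one dev fold."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled positions into k folds, like dealing cards.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        dev_idx = folds[i]
        train_idx = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train_idx, dev_idx


for train_idx, dev_idx in k_fold_indices(10, 5):
    print(len(train_idx), len(dev_idx))
```

With a DataFrame, the positions could then be turned into chunks with train.iloc[train_idx] and train.iloc[dev_idx] before calling nb_predict.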

Smoothing

While this accuracy isn't bad, I'm sure we can make it better. As you can see, a few reviews have been classified incorrectly, and some of them have a predicted sentiment of "0".

This is the zero-frequency problem. It occurs when a word that was not observed in training appears in the test dataset: its probability is zero, so the whole product for the review becomes zero for both classes.

This issue will crop up irrespective of how clean the dataset is, mainly because we haven't calculated the probabilities of every word in the English language, only of the ones in the training set.

To mitigate this issue, we can implement something called "smoothing". With it, even when a new word shows up, the probabilities for the document don't become zero.

We will use a simple variant inspired by "Laplace smoothing" (also called "add-one smoothing"): nb_predict adds a small constant, 1 divided by the total number of words in the training reviews, to every word probability.
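For comparison, textbook add-one smoothing adjusts the counts themselves rather than adding a constant to the finished probability, as nb_predict does. The sketch below shows that variant for the document-frequency estimate used here, together with a log-space score that stays numerically stable for long reviews. It is an illustration under my own assumptions, not the notebook's method: smoothed_likelihood, log_score, alpha and the counts are all hypothetical.

```python
import math


def smoothed_likelihood(docs_with_word, docs_in_class, alpha=1.0):
    """Add-alpha estimate of P(word present | class) from document counts."""
    # Two outcomes (word present or absent), hence 2 * alpha in the denominator.
    return (docs_with_word + alpha) / (docs_in_class + 2 * alpha)


def log_score(prior, likelihoods):
    """Sum logs instead of multiplying, so long reviews do not underflow."""
    return math.log(prior) + sum(math.log(p) for p in likelihoods)


# Hypothetical counts: an unseen word still gets a small non-zero probability.
p_unseen = smoothed_likelihood(0, 4000)
p_seen = smoothed_likelihood(1200, 4000)
print(p_unseen, p_seen)
print(log_score(0.5, [p_seen, p_unseen]))
```

Comparing log scores gives the same winner as comparing the products, because the logarithm is monotonic.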

```python
print("Predicting sentiment of review using Naive Bayes Classifier with smoothing")
print()

nb_predict(sample_train_data, sample_dev_data, smoothing=True)
```

```text
Predicting sentiment of review using Naive Bayes Classifier with smoothing

Processing
Accuracy: 71.0%
```

Cross-validation with smoothing

As we can see, the accuracy got better with smoothing. It's not by a lot but the difference is tangible.

We will verify the consistency of these results using Cross Validation with smoothing enabled.

```python
cross_validation(sample_train_data, 5, smoothing=True)
```

```text
Cross-validation Pass 1 with smoothing
Processing
Accuracy: 69.94%

Cross-validation Pass 2 with smoothing
Processing
Accuracy: 70.88%

Cross-validation Pass 3 with smoothing
Processing
Accuracy: 69.31%

Cross-validation Pass 4 with smoothing
Processing
Accuracy: 69.12%

Cross-validation Pass 5 with smoothing
Processing
Accuracy: 71.75%
```

Top 10 prominent words

Here, we explore the top 10 most prominent words that lead to a positive or a negative prediction.

```python
# Look only at correctly classified reviews that consist of a single word,
# so each score can be attributed to that one word.
accurate_predictions = sample_dev_data[(sample_dev_data.sentiment == sample_dev_data.predicted_sentiment) & (sample_dev_data.review.str.len() == 1)]

pos_preds = accurate_predictions[accurate_predictions.sentiment == "pos"].sort_values(by=['p_pos'], ascending=False)
top_ten_pos = pos_preds.explode('review').review.unique()[:10].tolist()

print("Top 10 words that predict a positive review:")
for i, word in enumerate(top_ten_pos):
    print("{}. {}".format(i + 1, word))
print()

neg_preds = accurate_predictions[accurate_predictions.sentiment == "neg"].sort_values(by=['p_neg'], ascending=False)
top_ten_neg = neg_preds.explode('review').review.unique()[:10].tolist()

print("Top 10 words that predict a negative review:")
for i, word in enumerate(top_ten_neg):
    print("{}. {}".format(i + 1, word))
```

```text
Top 10 words that predict a positive review:
1. good
2. great
3. love
4. best
5. little
6. back
7. real
8. though
9. family
10. role

Top 10 words that predict a negative review:
1. like
2. bad
3. would
4. get
5. dont
6. much
7. could
8. watch
9. acting
10. know
```

Final output on Test data with smoothing enabled

It is now clear that the classifier performs better with smoothing enabled. We will therefore enable smoothing and calculate the final accuracy on the untouched test data. This should be our final answer.

The final accuracy is generally expected to be lower than the accuracy seen during development, mainly because the test data has played no part in any of the choices we made while tuning the model.

```python
nb_predict(train_data, test_data, smoothing=True)
```

```text
Processing
Accuracy: 71.24%
```

The Jupyter Notebook can be downloaded from here and is also available on Kaggle

References:

Large Movie Reviews Dataset - Stanford AI

Probability Smoothing - Lazy Programmer